Method Overview

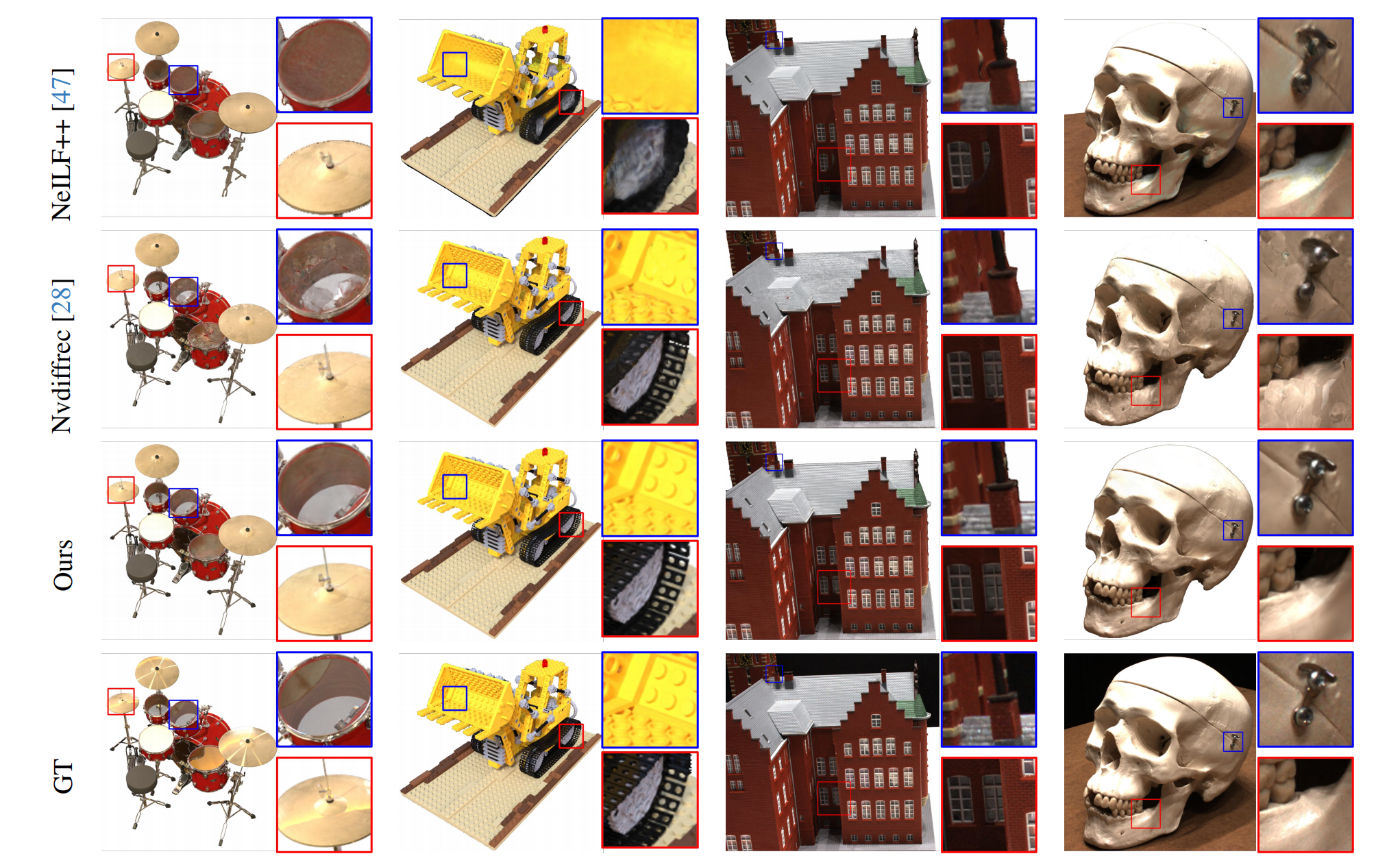

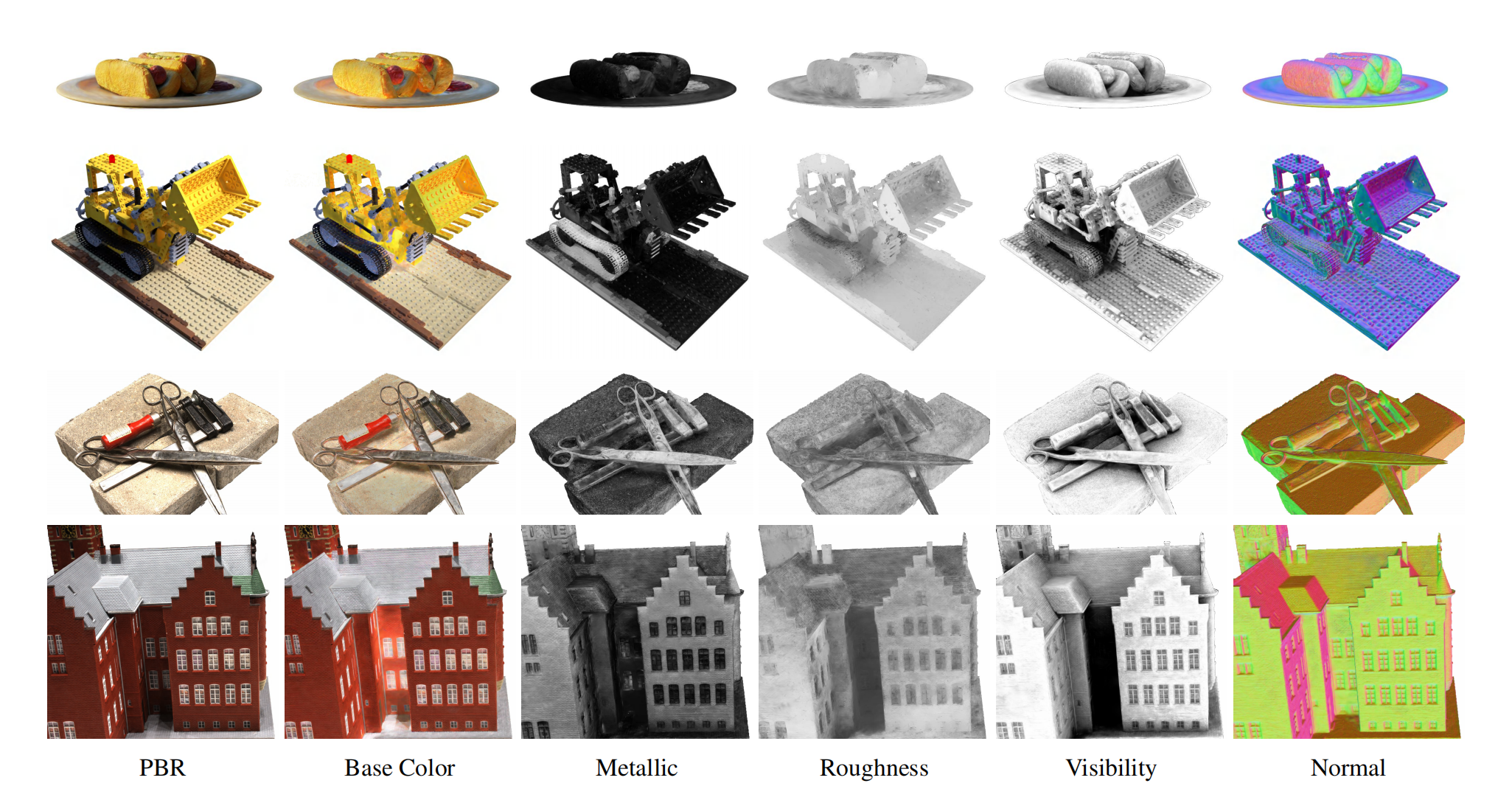

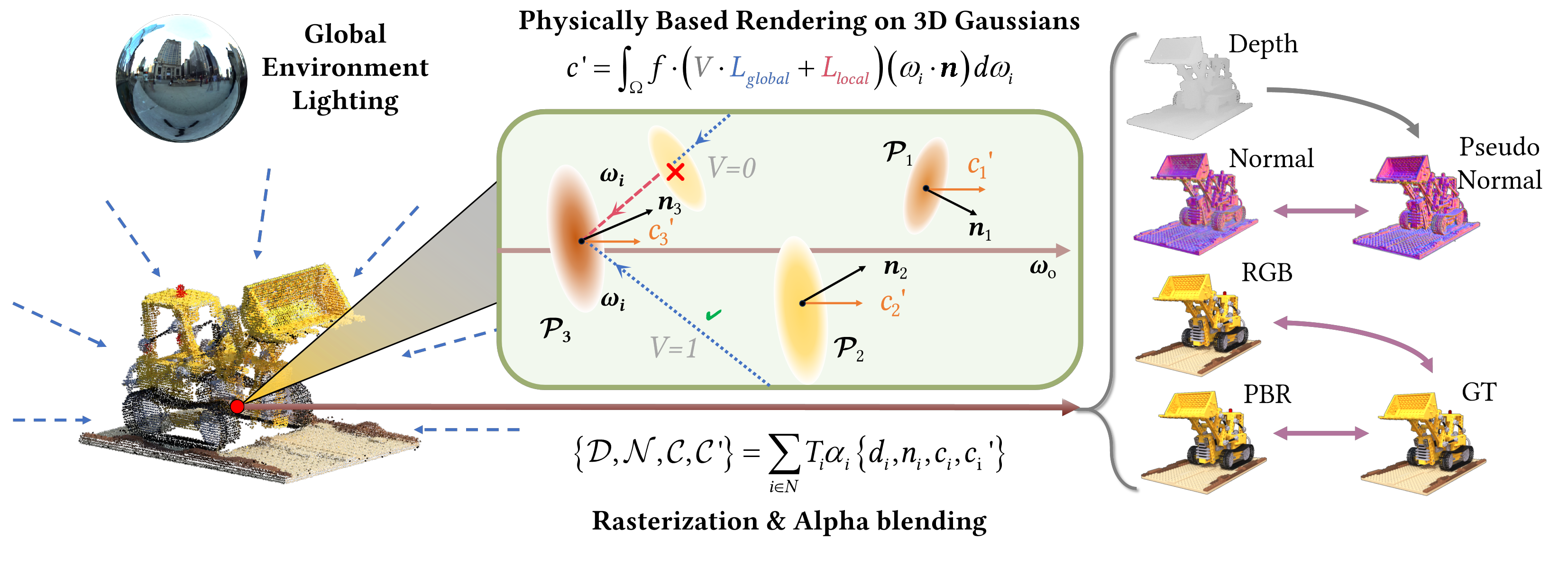

The proposed differentiable rendering pipeline. Starting from a collection of 3D Gaussians that carry geometry, material, and lighting attributes, we first evaluate the rendering equation at the Gaussian level to obtain each Gaussian's outgoing radiance toward a given viewpoint. We then render the corresponding features by rasterization with alpha blending, producing the vanilla color map, the physically based rendered color map, the depth map, the normal map, and other feature maps. To optimize the relightable 3D Gaussians, we supervise with the ground-truth image and with a pseudo normal map derived from the rendered depth map.
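As a rough illustration of the alpha-blending step in the caption, the sketch below composites depth-sorted per-Gaussian features for a single pixel, front to back. It assumes the per-pixel opacities have already been evaluated from each Gaussian's projected footprint; the function name `alpha_blend` and its arguments are hypothetical and not taken from the authors' code. The same routine can blend any per-Gaussian feature (color, depth, normal), which is why one rasterization pass can yield several maps.

```python
from typing import List, Sequence, Tuple


def alpha_blend(
    features: Sequence[Sequence[float]],
    alphas: Sequence[float],
) -> Tuple[List[float], float]:
    """Front-to-back alpha compositing for one pixel.

    features: per-Gaussian feature vectors, sorted near to far (assumed).
    alphas:   per-Gaussian opacities at this pixel, each in [0, 1] (assumed
              to be precomputed from the projected Gaussian footprint).
    Returns the blended feature vector and the accumulated opacity.
    """
    if len(features) != len(alphas):
        raise ValueError("features and alphas must have the same length")

    dim = len(features[0]) if features else 0
    blended = [0.0] * dim
    transmittance = 1.0  # fraction of light not yet absorbed by nearer Gaussians

    for feature, alpha in zip(features, alphas):
        weight = transmittance * alpha  # contribution of this Gaussian
        for k in range(dim):
            blended[k] += weight * feature[k]
        transmittance *= 1.0 - alpha

    return blended, 1.0 - transmittance


if __name__ == "__main__":
    # Two Gaussians covering the pixel: a red one in front of a green one.
    color, opacity = alpha_blend([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]], [0.5, 0.5])
    print(color, opacity)
```

Passing per-Gaussian depths or normals as `features` instead of colors would produce the corresponding depth or normal value for the pixel under the same weights.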

- A material and lighting decomposition scheme for 3D Gaussian Splatting, in which a normal, a BRDF, and incident lighting are assigned to and optimized for each 3D Gaussian point.

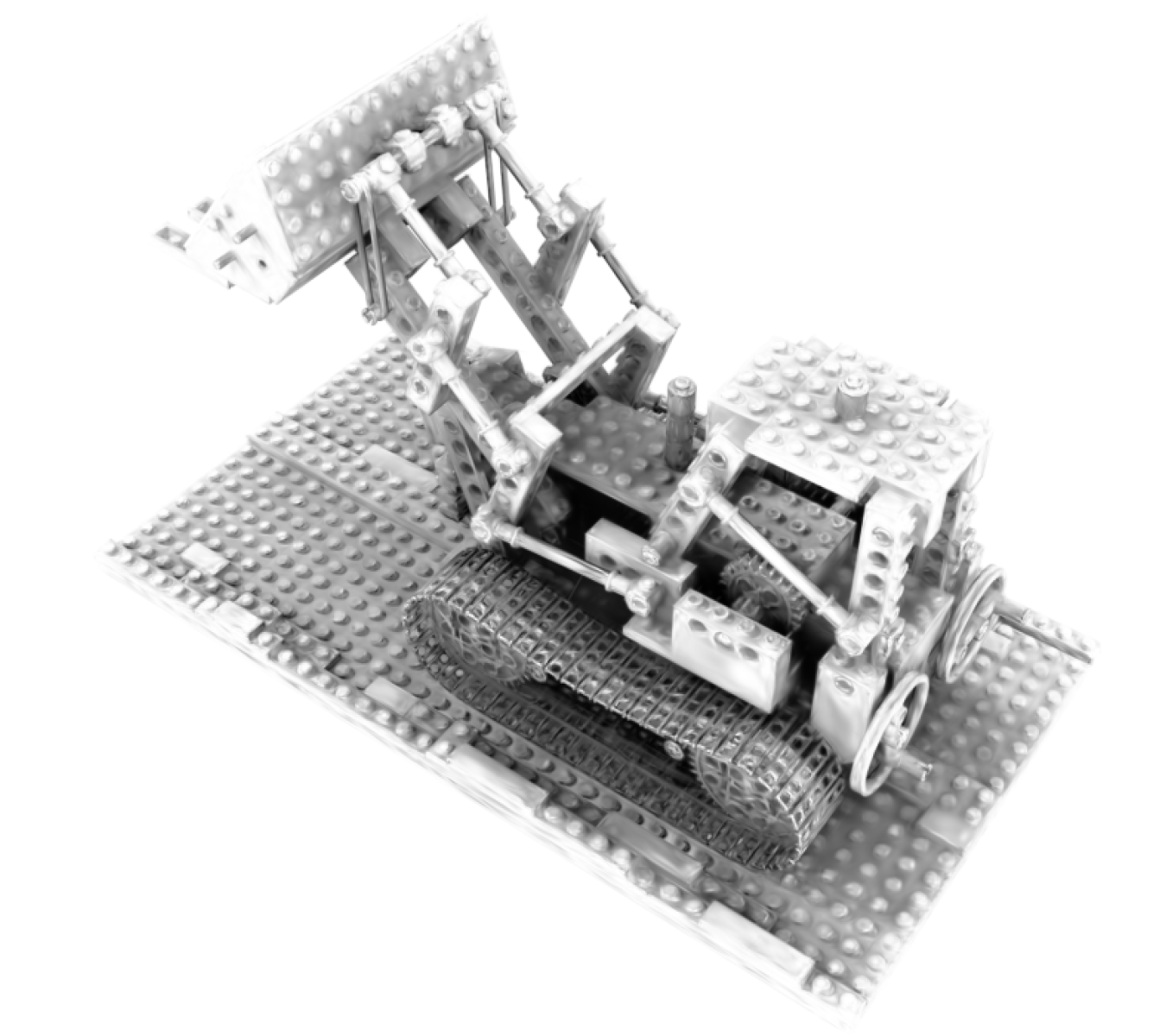

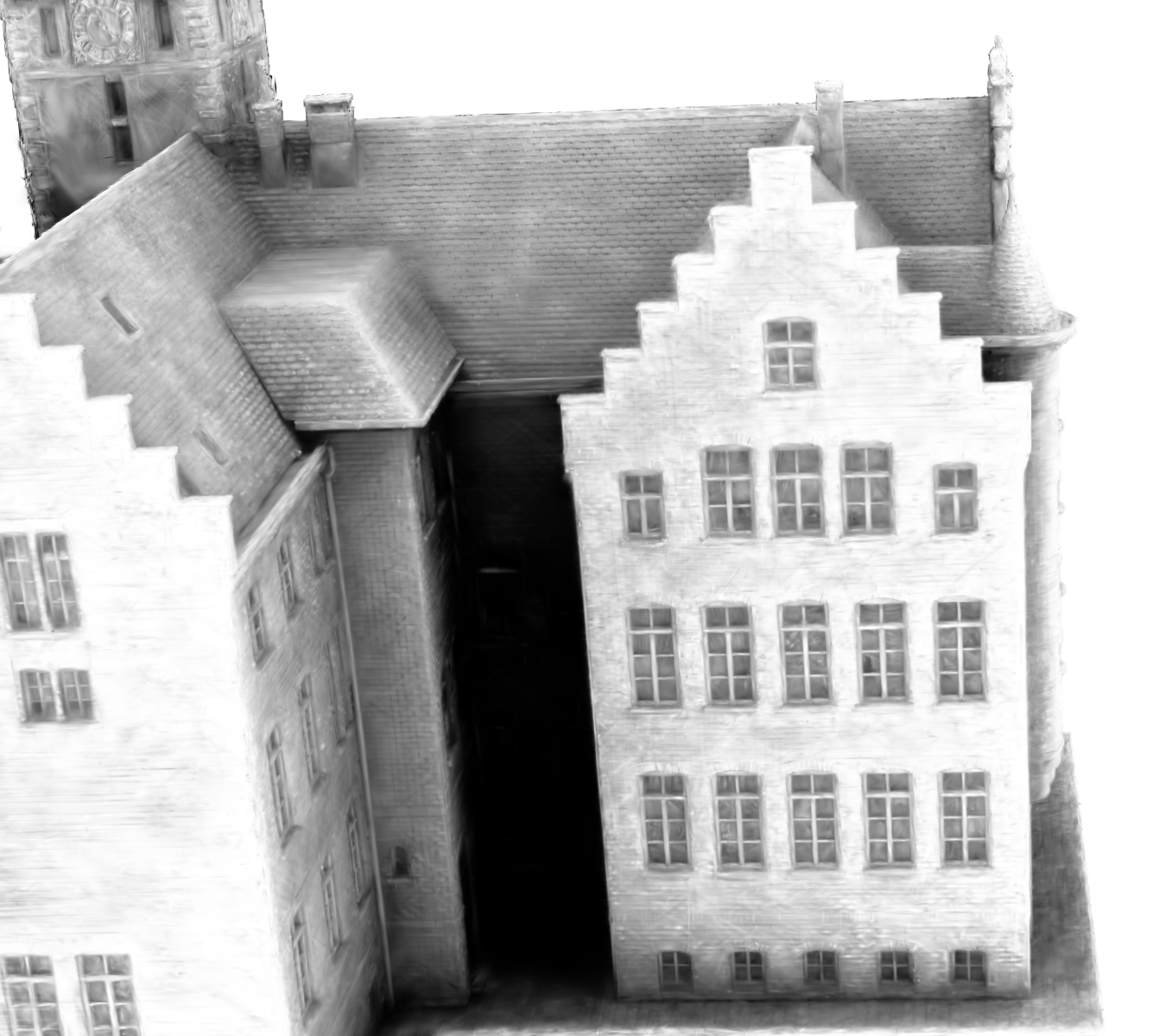

- A novel point-based ray-tracing approach based on a bounding volume hierarchy (BVH), enabling efficient visibility baking for every 3D Gaussian point and rendering of a 3D scene with realistic shadow effects.

- A comprehensive graphics pipeline solely based on a discretized point representation, supporting relighting, editing, and ray tracing of a reconstructed 3D point cloud.
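To make the per-Gaussian decomposition concrete, here is a minimal sketch of how a normal, a material, incident light, and baked visibility could combine into outgoing radiance for one Gaussian. It is an illustration under stated assumptions, not the authors' implementation: it uses a purely Lambertian BRDF (the paper's BRDF model is not specified in this section), uniform hemisphere sampling of incident directions, and takes visibility as a precomputed per-direction value in place of the BVH ray tracer. The name `shade_gaussian` and all parameters are hypothetical.

```python
import math
from typing import List, Sequence

Vec3 = Sequence[float]


def dot(a: Vec3, b: Vec3) -> float:
    return sum(x * y for x, y in zip(a, b))


def shade_gaussian(
    normal: Vec3,
    albedo: Vec3,
    directions: Sequence[Vec3],
    radiances: Sequence[Vec3],
    visibilities: Sequence[float],
) -> List[float]:
    """Monte Carlo estimate of the rendering equation for one Gaussian.

    Assumptions (not from the source): Lambertian BRDF albedo / pi, and
    incident directions drawn uniformly over the hemisphere (pdf = 1 / (2 pi)).
    visibilities[i] in [0, 1] stands in for the baked visibility along
    directions[i]; 0 means the light from that direction is fully occluded.
    """
    n = len(directions)
    if n == 0:
        return [0.0, 0.0, 0.0]
    if not (len(radiances) == n and len(visibilities) == n):
        raise ValueError("directions, radiances, visibilities must match in length")

    pdf = 1.0 / (2.0 * math.pi)
    out = [0.0, 0.0, 0.0]
    for w_i, l_i, v_i in zip(directions, radiances, visibilities):
        cos_theta = max(0.0, dot(normal, w_i))  # ignore light from below the surface
        for c in range(3):
            brdf = albedo[c] / math.pi
            out[c] += brdf * l_i[c] * v_i * cos_theta / pdf
    return [x / n for x in out]


if __name__ == "__main__":
    n = (0.0, 0.0, 1.0)
    lit = shade_gaussian(n, (1.0, 1.0, 1.0), [n], [(1.0, 1.0, 1.0)], [1.0])
    shadowed = shade_gaussian(n, (1.0, 1.0, 1.0), [n], [(1.0, 1.0, 1.0)], [0.0])
    print(lit, shadowed)
```

Because the Lambertian term is view-independent, the viewing direction does not appear here; a view-dependent BRDF would take it as an extra argument. Setting a visibility entry to zero removes that direction's contribution, which is how baked visibility would produce shadows in this simplified setting.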